Threading

Introduction

This chapter explains how to use PhysX in multithreaded applications. There are three main aspects to using PhysX with multiple threads:

how to make read and write calls into the PhysX API from multiple threads without causing race conditions.

how to use multiple threads to accelerate simulation processing.

how to perform asynchronous simulation, and read and write to the API while simulation is being processed.

Data Access from Multiple Threads

For efficiency reasons, PhysX does not internally lock access to its data structures by the application, so be careful when calling the API from multiple application threads. The rules are as follows:

API interface methods marked ‘const’ are read calls; all other API interface methods are write calls.

API read calls may be made simultaneously from multiple threads.

Objects in different scenes may be safely accessed by different threads.

Different objects outside a scene may be safely accessed from different threads. Be aware that accessing an object may indirectly cause access to another object via a persistent reference (such as joints and actors referencing one another, an actor referencing a shape, or a shape referencing a mesh.)

Access patterns that do not conform to the above rules may result in data corruption, deadlocks, or crashes. Note in particular that it is not legal to perform a write operation on an object in a scene concurrently with a read operation on an object in the same scene. The checked build contains code that tracks access by application threads to objects within a scene, to try to detect problems at the point when the illegal API call is made.

Scene Locking

Each PxScene object provides a multiple reader, single writer lock that can be used to control access to the scene by multiple threads. This is useful for situations where the PhysX scene is shared between more than one independent subsystem. The scene lock provides a way for these systems to coordinate with each other without creating direct dependencies.

It is not mandatory to use the lock. If all access to the scene is from a single thread, using the lock adds unnecessary overhead. Even if the scene is accessed from multiple threads, the threads may be synchronized using a simpler or more efficient application-specific mechanism that guarantees that the application meets the above conditions. However, using the scene lock has the following potential benefit:

If the PxSceneFlag::eREQUIRE_RW_LOCK is set, the checked build will issue a warning for any API call made without first acquiring the lock, or if a write call is made when the lock has only been acquired for read.

There are four methods for acquiring / releasing the lock:

void PxScene::lockRead(const char* file=NULL, PxU32 line=0);

void PxScene::unlockRead();

void PxScene::lockWrite(const char* file=NULL, PxU32 line=0);

void PxScene::unlockWrite();

Additionally there is an RAII helper class to manage these locks, see PxSceneLock.h.
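The RAII idea can be sketched as follows. This is a minimal illustration, not the SDK's implementation: MockScene is a hypothetical stand-in that models only the four lock methods listed above (without the optional file and line arguments, and without re-entrancy), and ScopedReadLock and ScopedWriteLock are illustrative names. In an application, the helper classes declared in PxSceneLock.h would take their place.

```cpp
#include <atomic>
#include <shared_mutex>

// Hypothetical stand-in for PxScene: only the four lock methods are modeled.
// Unlike the real scene lock, this stand-in is NOT re-entrant.
class MockScene {
public:
    void lockRead()    { mMutex.lock_shared(); ++mReaders; }
    void unlockRead()  { --mReaders; mMutex.unlock_shared(); }
    void lockWrite()   { mMutex.lock(); ++mWriters; }
    void unlockWrite() { --mWriters; mMutex.unlock(); }

    int readers() const { return mReaders.load(); }
    int writers() const { return mWriters.load(); }

private:
    std::shared_mutex mMutex;
    std::atomic<int>  mReaders{0};
    std::atomic<int>  mWriters{0};
};

// Acquires the read lock on construction and releases it on destruction,
// so every lockRead() is paired with an unlockRead() even on early return.
class ScopedReadLock {
public:
    explicit ScopedReadLock(MockScene& scene) : mScene(scene) { mScene.lockRead(); }
    ~ScopedReadLock() { mScene.unlockRead(); }
    ScopedReadLock(const ScopedReadLock&) = delete;
    ScopedReadLock& operator=(const ScopedReadLock&) = delete;
private:
    MockScene& mScene;
};

// Same pattern for the write lock.
class ScopedWriteLock {
public:
    explicit ScopedWriteLock(MockScene& scene) : mScene(scene) { mScene.lockWrite(); }
    ~ScopedWriteLock() { mScene.unlockWrite(); }
    ScopedWriteLock(const ScopedWriteLock&) = delete;
    ScopedWriteLock& operator=(const ScopedWriteLock&) = delete;
private:
    MockScene& mScene;
};
```

Declaring the guard at the top of a scope keeps the lock held for exactly the lifetime of that scope, which also makes the reverse-order unlock requirement automatic when guards are nested.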

Locking Semantics

There are precise rules regarding the usage of the scene lock:

Multiple threads may read at the same time.

Only one thread may write at a time, and no thread may write while any thread is reading.

If a thread holds a write lock then it may call both read and write API methods.

Re-entrant read locks are supported, meaning a lockRead() on a thread that has already acquired a read lock is permitted. Each lockRead() must have a paired unlockRead().

Re-entrant write locks are supported, meaning a lockWrite() on a thread that has already acquired a write lock is permitted. Each lockWrite() must have a paired unlockWrite().

Calling lockRead() by a thread that has already acquired the write lock is permitted and the thread will continue to have read and write access. Each lock*() must have an associated unlock*() that occurs in reverse order.

Lock upgrading is not supported: a lockWrite() by a thread that has already acquired a read lock is not permitted. Attempting this in checked builds will result in an error; in release builds it will lead to deadlock.

Writers are favored - if a thread attempts a lockWrite() while the read lock is acquired it will be blocked until all readers leave. If new readers arrive while the writer thread is blocked they will be put to sleep and the writer will have first chance to access the scene. This prevents writers being starved in the presence of multiple readers.

If multiple writers are queued then the first writer will receive priority; subsequent writers will be granted access according to OS scheduling.

Note: PxScene::release() automatically attempts to acquire the write lock, so it is not necessary to acquire it manually before calling release().
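The per-thread part of these rules (re-entrancy, reading while holding the write lock, and the ban on upgrading) can be modeled with two counters. The sketch below is a hypothetical bookkeeping model for a single thread, written only to make the rules concrete. It does not block, does not model other threads, and is not how the SDK implements the lock.

```cpp
#include <cassert>

// Hypothetical per-thread model of the scene lock rules; not SDK code.
struct ThreadLockState {
    int readDepth  = 0;  // nested lockRead() calls held by this thread
    int writeDepth = 0;  // nested lockWrite() calls held by this thread

    // A thread holding the write lock may call both read and write methods.
    bool canRead() const  { return readDepth > 0 || writeDepth > 0; }
    bool canWrite() const { return writeDepth > 0; }

    // Re-entrant reads are allowed, including while the write lock is held.
    void lockRead()   { ++readDepth; }
    void unlockRead() { assert(readDepth > 0); --readDepth; }

    // Returns false for an attempted upgrade: the thread holds only a read
    // lock and asks for the write lock. Re-entrant writes are allowed.
    bool lockWrite() {
        if (readDepth > 0 && writeDepth == 0)
            return false;
        ++writeDepth;
        return true;
    }
    void unlockWrite() { assert(writeDepth > 0); --writeDepth; }
};
```

In this model a rejected lockWrite() corresponds to the error reported by checked builds. A release build has no such check, which is why the same call sequence deadlocks there.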

Locking Best Practices

It is often useful to have the application acquire the lock a single time to perform multiple operations. This minimizes the overhead of the lock, and in addition can prevent cases such as a sweep test in one thread seeing a rag doll that has been only partially inserted by another thread.

Clustering writes can also help reduce contention for the lock, as acquiring the lock for write will stall any other thread trying to perform a read access.
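The rag doll case can be illustrated with a small stand-alone sketch. Everything here is an assumption made for illustration: MockScene is a hypothetical stand-in with a std::shared_mutex in place of the scene lock, and the integer "actors" stand in for rag doll bones. The writer inserts all bones under one write lock, so a concurrent reader observes either none of them or all of them.

```cpp
#include <cstddef>
#include <mutex>
#include <shared_mutex>
#include <thread>
#include <vector>

// Hypothetical stand-in for a scene guarded by a reader/writer lock.
struct MockScene {
    std::shared_mutex lock;
    std::vector<int>  actors;
};

// Inserts every bone while holding the write lock once, instead of
// locking and unlocking around each individual insertion.
void insertRagdoll(MockScene& scene, int boneCount) {
    std::unique_lock<std::shared_mutex> write(scene.lock);
    for (int i = 0; i < boneCount; ++i)
        scene.actors.push_back(i);
}

// A read-only query: counts actors under the read lock.
std::size_t countActors(MockScene& scene) {
    std::shared_lock<std::shared_mutex> read(scene.lock);
    return scene.actors.size();
}

// Runs a reader thread against a concurrent insertion and reports whether
// the reader only ever saw an empty scene or the complete rag doll.
bool readerNeverSeesPartialRagdoll(int boneCount, int samples) {
    MockScene scene;
    bool ok = true;  // written only by the reader thread, read after join()
    std::thread reader([&] {
        for (int i = 0; i < samples; ++i) {
            const std::size_t n = countActors(scene);
            if (n != 0 && n != static_cast<std::size_t>(boneCount))
                ok = false;
        }
    });
    insertRagdoll(scene, boneCount);
    reader.join();
    return ok;
}
```

Had insertRagdoll() taken the lock once per bone, the reader could run between two insertions and see a partially built rag doll.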

Asynchronous Simulation

PhysX simulation is asynchronous by default. Start simulation by calling:

scene->simulate(dt);

When this call returns, the simulation step has begun in a separate thread (if the CPU dispatcher has at least one thread in the thread pool). While simulation is running, it is illegal to modify the scene or any actor or shape in the scene.

To wait until simulation completes, call:

scene->fetchResults(true);

The boolean parameter to fetchResults denotes whether the call should wait for simulation to complete, or return immediately with the current completion status. See the API documentation for more detail.

It is important to distinguish two time slots for data access:

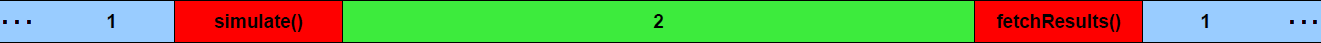

After the call to PxScene::fetchResults() has returned and before the next PxScene::simulate() call (see the figure above, blue area “1”).

After the call to PxScene::simulate() has returned and before the corresponding PxScene::fetchResults() call (see the figure above, green area “2”).

In the first time slot, the simulation is not running and there are no restrictions for reading or writing object properties. Changes to the position of an object, for example, are applied instantaneously and the next scene query or simulation step will take the new state into account.

In the second time slot the simulation is running and, in the process, reading and changing the state of objects. Concurrent access from the user might corrupt the state of the objects or lead to data races or inconsistent views in the simulation code. Hence, the simulation code’s view of the objects must not be interrupted by API writes.

Note that simulate() and fetchResults() are write calls on the scene, and as such it is illegal to access any object in the scene while these functions are running.
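The handshake between simulate() and fetchResults() can be sketched with standard C++ only. MockScene below is a hypothetical stand-in, and its "simulation" merely advances a clock on another thread via std::async. It is meant to show the shape of the frame loop and the blocking versus polling behavior of fetchResults(), not how the SDK schedules work.

```cpp
#include <cassert>
#include <chrono>
#include <future>

// Hypothetical stand-in for a scene; models only the simulate() /
// fetchResults() handshake described above.
class MockScene {
public:
    // Starts the step on another thread and returns immediately.
    void simulate(float dt) {
        assert(!mStep.valid() && "simulate() called twice without fetchResults()");
        mStep = std::async(std::launch::async, [this, dt] { mTime += dt; });
    }

    // block == true : wait until the step has completed.
    // block == false: return false right away if the step is still running.
    // Returns true once the results of the step have been fetched.
    bool fetchResults(bool block) {
        if (!mStep.valid())
            return false;  // no step in flight
        if (!block &&
            mStep.wait_for(std::chrono::seconds(0)) != std::future_status::ready)
            return false;
        mStep.get();       // joins the step; the future becomes invalid
        return true;
    }

    bool  isSimulating() const { return mStep.valid(); }
    float time() const { assert(!isSimulating()); return mTime; }

private:
    std::future<void> mStep;
    float             mTime = 0.0f;
};

// A frame loop with the two time slots marked.
float runFrames(int frameCount, float dt) {
    MockScene scene;
    for (int i = 0; i < frameCount; ++i) {
        // Time slot 1: simulation is idle, reads and writes are allowed.
        scene.simulate(dt);
        // Time slot 2: simulation is running, no writes to the scene.
        scene.fetchResults(true);
    }
    return scene.time();
}
```

A non-blocking variant would call fetchResults(false) once per application tick and carry on with other work until it returns true.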

Double Buffering

As of PhysX 5.0, double buffering is no longer supported. It is not allowed to add, remove or modify scene objects while the simulation is running.
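One way an application might cope with this restriction is to queue its own write requests while the simulation is running and apply them once fetchResults() has returned. The sketch below is an application-side pattern under that assumption, not an SDK feature: MockScene and DeferredWrites are hypothetical names, and the commands are plain callables.

```cpp
#include <cstddef>
#include <functional>
#include <mutex>
#include <utility>
#include <vector>

// Hypothetical stand-in for a scene; only an actor counter is modeled.
struct MockScene {
    int actorCount = 0;
};

// Collects write requests from any thread while the simulation runs.
class DeferredWrites {
public:
    using Command = std::function<void(MockScene&)>;

    // Safe to call from any thread at any time.
    void enqueue(Command cmd) {
        std::lock_guard<std::mutex> guard(mMutex);
        mPending.push_back(std::move(cmd));
    }

    // Call only after fetchResults() has returned and before the next
    // simulate(). Applies the queued writes in submission order and
    // returns how many were applied.
    std::size_t flush(MockScene& scene) {
        std::vector<Command> commands;
        {
            std::lock_guard<std::mutex> guard(mMutex);
            commands.swap(mPending);
        }
        for (Command& cmd : commands)
            cmd(scene);
        return commands.size();
    }

private:
    std::mutex           mMutex;
    std::vector<Command> mPending;
};
```

The queue is swapped out under the mutex and executed outside it, so a command that enqueues further work does not deadlock; such work is simply applied on the next flush.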

Multithreaded Simulation

PhysX includes a task system for managing compute resources. Tasks are created with dependencies so that they are resolved in a given order; when ready, they are submitted to a user-implemented dispatcher for execution.

Middleware products typically do not want to create CPU threads for their own use. This is especially true on consoles where execution threads can have significant overhead. In the task model, the computational work is broken into jobs that are submitted to the application’s thread pool as they become ready to run.

The following classes comprise the CPU task management.

TaskManager

A TaskManager manages inter-task dependencies and dispatches ready tasks to their respective dispatcher. There is a dispatcher for CPU tasks assigned to the TaskManager.

TaskManagers are owned and created by the SDK. Each PxScene will allocate its own TaskManager instance which users can configure with dispatchers through either the PxSceneDesc or directly through the TaskManager interface.

CpuDispatcher

The CpuDispatcher is an abstract class the SDK uses for interfacing with the application’s thread pool. Typically, there will be one single CpuDispatcher for the entire application, since there is rarely a need for more than one thread pool. A CpuDispatcher instance may be shared by more than one TaskManager, for example if multiple scenes are being used.

PhysX includes a default CpuDispatcher implementation, but it is recommended for applications to implement this class themselves so PhysX can efficiently share CPU resources with the application.

Note

The TaskManager will call CpuDispatcher::submitTask() either from the context of API calls (for example, PxScene::simulate()) or from other running tasks, so the function must be thread-safe.

An implementation of the CpuDispatcher interface must call the following two methods on each submitted task for it to be run correctly:

baseTask->run();

baseTask->release();
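The smallest dispatcher that satisfies this contract runs each task on the submitting thread. The sketch below uses hypothetical stand-in interfaces (BaseTask and CpuDispatcher, reduced to the methods discussed here) so that it compiles without the SDK. A real implementation would derive from the SDK's dispatcher interface instead.

```cpp
#include <string>

// Hypothetical stand-in for the SDK's base task: only run()/release().
struct BaseTask {
    virtual ~BaseTask() = default;
    virtual void run() = 0;
    virtual void release() = 0;
};

// Hypothetical stand-in for the dispatcher interface.
struct CpuDispatcher {
    virtual ~CpuDispatcher() = default;
    virtual void submitTask(BaseTask& task) = 0;
    virtual unsigned getWorkerCount() const = 0;
};

// Executes every task immediately on the thread that submits it.
// There is no shared state, so submitTask() is trivially thread-safe.
class InlineDispatcher : public CpuDispatcher {
public:
    void submitTask(BaseTask& task) override {
        task.run();      // do the work
        task.release();  // then let the task notify its dependents
    }
    unsigned getWorkerCount() const override { return 0; }
};

// Example task that records the order of the calls it receives.
struct LoggingTask : BaseTask {
    std::string log;
    void run() override     { log += "run;"; }
    void release() override { log += "release;"; }
};
```

The order matters: release() is what lets dependent tasks become ready, so it has to follow run() for every submitted task.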

The PxExtensions library has a default implementation of a CPU dispatcher. The following code snippet shows how the default dispatcher is created.

mNbThreads, which is passed to PxDefaultCpuDispatcherCreate(), defines how many worker threads the CPU dispatcher will have:

PxSceneDesc sceneDesc(mPhysics->getTolerancesScale());

[...]

// create CPU dispatcher with mNbThreads worker threads

mCpuDispatcher = PxDefaultCpuDispatcherCreate(mNbThreads);

if(!mCpuDispatcher)

    fatalError("PxDefaultCpuDispatcherCreate failed!");

sceneDesc.cpuDispatcher = mCpuDispatcher;

[...]

mScene = mPhysics->createScene(sceneDesc);

Note

Best performance is usually achieved if the number of threads is less than or equal to the available hardware threads of the platform to run on; creating more worker threads than hardware threads will often lead to worse performance. For platforms with a single execution core, the CPU dispatcher can be created with zero worker threads (PxDefaultCpuDispatcherCreate(0)). In this case all work will be executed on the thread that calls PxScene::simulate(), which can be more efficient than using multiple threads.
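A possible way to pick the worker count in line with this note is sketched below. The policy and the names chooseWorkerCount, defaultWorkerCount and reservedThreads are assumptions made for illustration; the right number depends on what else the application runs. The result is the kind of value that would be passed as mNbThreads.

```cpp
#include <thread>

// Hypothetical policy: never exceed the hardware thread count, leave
// `reservedThreads` hardware threads to the rest of the application, and
// fall back to zero workers on single-core or unknown hardware.
unsigned chooseWorkerCount(unsigned hardwareThreads, unsigned reservedThreads) {
    if (hardwareThreads <= 1)
        return 0;  // single core, or hardware_concurrency() reported 0
    if (reservedThreads >= hardwareThreads)
        return 1;  // keep at least one worker on multi-core hardware
    return hardwareThreads - reservedThreads;
}

// Convenience wrapper that queries the platform and reserves one thread
// for the application's main thread.
unsigned defaultWorkerCount() {
    return chooseWorkerCount(std::thread::hardware_concurrency(), 1);
}
```

std::thread::hardware_concurrency() is only a hint and may return 0, which this policy treats the same as a single-core platform.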

CpuDispatcher Implementation Guidelines

After the scene’s TaskManager has found a ready-to-run task and submitted it to the appropriate dispatcher it is up to the dispatcher implementation to decide how and when the task will be run.

Often in game scenarios the rigid body simulation is time critical and the goal is to reduce the latency from simulate() to the completion of fetchResults(). The lowest possible latency will be achieved when the PhysX tasks have exclusive access to CPU resources during the update. In reality, PhysX will have to share compute resources with other application tasks. Below are some guidelines to help ensure a balance between throughput and latency when mixing the PhysX update with other work.

Avoid interleaving long-running tasks with PhysX tasks; this will help reduce latency.

Avoid assigning worker threads to the same execution core as higher priority threads. If a PhysX task is context switched during execution the rest of the rigid body pipeline may be stalled, increasing latency.

PhysX occasionally submits tasks and then immediately waits for them to complete. Because of this, executing tasks in LIFO (stack) order may perform better than FIFO (queue) order.

PhysX is not a perfectly parallel SDK, so interleaving small to medium granularity tasks will generally result in higher overall throughput.

If the thread pool has per-thread job-queues then queuing tasks on the thread they were submitted to may result in more optimal CPU cache coherence, however this is not required.

For more details see the default CpuDispatcher implementation that comes as part of the PxExtensions package. It uses worker threads that each have their own task queue and steal tasks from the back of other workers’ queues (LIFO order) to improve workload distribution.
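The LIFO guideline can be illustrated with a deliberately simple pool. The sketch below is an assumption-laden simplification, not the default dispatcher: it uses one shared stack guarded by a mutex rather than per-worker queues with stealing, and BaseTask is a hypothetical stand-in for the SDK's task interface.

```cpp
#include <atomic>
#include <condition_variable>
#include <cstddef>
#include <mutex>
#include <thread>
#include <vector>

// Hypothetical stand-in for the SDK's base task.
struct BaseTask {
    virtual ~BaseTask() = default;
    virtual void run() = 0;
    virtual void release() = 0;
};

// Worker pool that executes the most recently submitted task first.
class LifoDispatcher {
public:
    explicit LifoDispatcher(unsigned workerCount) {
        for (unsigned i = 0; i < workerCount; ++i)
            mWorkers.emplace_back([this] { workerLoop(); });
    }

    // Drains the remaining tasks, then stops and joins all workers.
    ~LifoDispatcher() {
        {
            std::lock_guard<std::mutex> guard(mMutex);
            mStopping = true;
        }
        mWake.notify_all();
        for (std::thread& worker : mWorkers)
            worker.join();
    }

    // Thread-safe: may be called from API calls or from running tasks.
    void submitTask(BaseTask& task) {
        {
            std::lock_guard<std::mutex> guard(mMutex);
            mStack.push_back(&task);
        }
        mWake.notify_one();
    }

    unsigned getWorkerCount() const { return static_cast<unsigned>(mWorkers.size()); }

private:
    void workerLoop() {
        for (;;) {
            BaseTask* task = nullptr;
            {
                std::unique_lock<std::mutex> lock(mMutex);
                mWake.wait(lock, [this] { return mStopping || !mStack.empty(); });
                if (mStack.empty())
                    return;            // stopping and nothing left to run
                task = mStack.back();  // LIFO: newest task first
                mStack.pop_back();
            }
            task->run();
            task->release();
        }
    }

    std::mutex               mMutex;
    std::condition_variable  mWake;
    std::vector<BaseTask*>   mStack;
    bool                     mStopping = false;
    std::vector<std::thread> mWorkers;  // declared last: started after the rest
};

// Example task that bumps a shared counter.
struct CountingTask : BaseTask {
    std::atomic<int>* counter = nullptr;
    void run() override     { ++*counter; }
    void release() override {}
};

// Submits `taskCount` tasks and returns how many were executed.
int runTasks(unsigned workers, int taskCount) {
    std::atomic<int> counter{0};
    std::vector<CountingTask> tasks(static_cast<std::size_t>(taskCount));
    for (CountingTask& task : tasks)
        task.counter = &counter;
    {
        LifoDispatcher dispatcher(workers);
        for (CountingTask& task : tasks)
            dispatcher.submitTask(task);
    }  // destructor drains the stack and joins the workers
    return counter.load();
}
```

A single shared stack is easy to reason about but becomes a contention point as the worker count grows, which is one motivation for the per-worker queues used by the default implementation.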

BaseTask

BaseTask is the abstract base class for all task types. All task run() functions will be executed on application threads, so they need to be careful with their stack usage. As little stack as possible should be used, and tasks should never block for any reason.

Task

The Task class is the standard task type. Tasks must be submitted to the TaskManager each simulation step for them to be executed. Tasks may be named at submission time, which makes them discoverable. Tasks are given a reference count of 1 when they are submitted, and the TaskManager::startSimulation() function decrements the reference count of all tasks and dispatches all Tasks whose reference count reaches zero. Before TaskManager::startSimulation() is called, Tasks can set dependencies on each other to control the order in which they are dispatched. Once simulation has started, it is still possible to submit new tasks and add dependencies, but it is up to the programmer to avoid race hazards. Dependencies cannot be added to tasks that have already been dispatched, and newly submitted Tasks must have their reference count decremented before they are allowed to execute.

Synchronization points can also be defined using Task names. The TaskManager will assign the name a TaskID with no Task implementation. When all of the named TaskID’s dependencies are met, it will decrement the reference count of all Tasks with that name.

LightCpuTask

LightCpuTask is another subclass of BaseTask that is explicitly scheduled by the programmer. LightCpuTasks have a reference count of 1 when they are initialized, so their reference count must be decremented before they are dispatched. LightCpuTasks increment their continuation task reference count when they are initialized, and decrement the reference count when they are released (after completing their run() function).

PhysX uses LightCpuTasks almost exclusively to manage CPU resources. For example, each stage of the simulation update may consist of multiple parallel tasks; when each of these tasks has finished execution, it decrements the reference count on the next task in the update chain. That task is then automatically dispatched for execution when its reference count reaches zero.

The following code snippets show how some agent A.I. is run as a CPU Task. The agent A.I. is run as a background Task in parallel with the PhysX simulation update.

For a CPU task that does not need to handle multiple continuations, LightCpuTask can be subclassed. A LightCpuTask subclass must define the getName() and run() methods:

class Agent : public physx::PxLightCpuTask

{

public:

[...]

// Implements LightCpuTask

virtual const char* getName() const { return "Agent AI Task"; }

virtual void run();

[...]

};

After PxScene::simulate() has been called and the simulation has started, the application calls removeReference() on each Agent task, which in turn causes it to be submitted to the CpuDispatcher for update. Note that it is also possible to submit tasks to the dispatcher directly (without manipulating reference counts) as follows:

PxLightCpuTask& task = mAgent;

mCpuDispatcher->submitTask(task);

Once queued for execution by the CpuDispatcher, one of the thread pool’s worker threads will eventually call the task’s run method. The Agent task might perform raycasts against the scene and update its internal state machine:

void Agent::run()

{

// run as a separate task/thread

scanForObstacles();

updateState();

}

It is safe to perform API read calls, such as scene queries, from multiple threads while the simulation is running, that is, between the return of simulate() and the call to fetchResults(). However, care must be taken not to overlap API read and write calls from multiple threads. In this case the SDK will issue an error; see Data Access from Multiple Threads for more information.

An example for explicit reference count modification and task dependency setup:

// c shall start once a and b have run, d shall start after c

// a and b have no dependencies and shall run in parallel

//

// a

// \

// c - d

// /

// b

d.setContinuation(taskManager, NULL);

c.setContinuation(d);

a.setContinuation(c);

b.setContinuation(c);

// setup is done, now start all tasks by decrementing their refcount by 1

// tasks with refcount == 0 will be submitted to the dispatcher (a & b will start).

a.removeReference();

b.removeReference();

c.removeReference();

d.removeReference();
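The reference-count bookkeeping behind the snippet above can be modeled in a few lines. ModelTask below is a hypothetical, single-threaded stand-in written for illustration: a task that reaches a reference count of zero is run immediately instead of being handed to a dispatcher, and no task manager is involved. It follows the counting rules described for LightCpuTask (initial count of 1, continuation incremented at setup and decremented on release).

```cpp
#include <cassert>
#include <string>
#include <utility>
#include <vector>

// Hypothetical single-threaded model of reference-counted tasks.
class ModelTask {
public:
    ModelTask(std::string name, std::vector<std::string>& log)
        : mName(std::move(name)), mLog(log) {}

    // Registers `next` as the task to unblock after this one has run.
    // The continuation gains one reference per task that points at it.
    void setContinuation(ModelTask* next) {
        mNext = next;
        if (mNext)
            mNext->addReference();
    }

    void addReference() { ++mRefCount; }

    // When the count reaches zero the task is "dispatched"; in this model
    // that means it runs right away on the calling thread.
    void removeReference() {
        assert(mRefCount > 0);
        if (--mRefCount == 0) {
            run();
            release();
        }
    }

private:
    void run()     { mLog.push_back(mName); }
    void release() { if (mNext) mNext->removeReference(); }

    std::string               mName;
    std::vector<std::string>& mLog;
    ModelTask*                mNext = nullptr;
    int                       mRefCount = 1;  // initial reference
};

// Builds the a/b -> c -> d graph and returns the order of execution.
std::vector<std::string> runDiamond() {
    std::vector<std::string> log;
    ModelTask a("a", log), b("b", log), c("c", log), d("d", log);

    d.setContinuation(nullptr);
    c.setContinuation(&d);
    a.setContinuation(&c);
    b.setContinuation(&c);

    // Drop the initial reference of every task. Only a and b reach zero
    // here; c and d are still held by their predecessors.
    a.removeReference();
    b.removeReference();
    c.removeReference();
    d.removeReference();
    return log;
}
```

In this model c starts with a count of 3 after setup (its own initial reference plus one each from a and b), which is why it cannot run until both a and b have released it and its own initial reference has been removed.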